Anyone who thinks this is remotely possible or a good idea has no idea what healthcare providers actually do on a day-to-day basis, especially in inpatient settings like hospitals.

So the question is: do hospital administrators have any idea what healthcare providers actually do on a day-to-day basis?

When it’s gonna cost them $81/hour less per nurse, I don’t think it’s even gonna matter. They’ll let someone else deal with the fallout.

Yeah. Everything is a calculated business decision.

They’ll look at the laws, the penalties, and do whatever they believe will maximize profit.

Boeing did the same thing when they cut corners and killed over 300 people.

Narrator: A new car built by my company leaves somewhere traveling at 60 mph. The rear differential locks up. The car crashes and burns with everyone trapped inside. Now, should we initiate a recall? Take the number of vehicles in the field, A, multiply by the probable rate of failure, B, multiply by the average out-of-court settlement, C. A times B times C equals X. If X is less than the cost of a recall, we don’t do one.

Woman on Plane: Are there a lot of these kinds of accidents?

Narrator: You wouldn’t believe.

Woman on Plane: Which car company do you work for?

Narrator: A major one.

Fight Club

My spouse is an ER doctor here in the US. The answer is no. They don’t buy hospitals to take care of patients. They buy them to make a huge profit that the absolute state of the US healthcare system lets them get away with (private medicine and insurance, not the nurses and doctors working within it, to be clear).

The fuckery those assholes invent that adversely affects patient care for the sake of increasing profit margins is wild and infuriating to watch.

I agree that nurses are invaluable and irreplaceable and that no AI is going to be able to replicate what a human’s judgement can do. But honestly, it’ll be the same as what our hospital’s “nursing line” offers us right now. You call, and they ask scripted questions and give you scripted responses, which usually end with them recommending that you go in. I get that it’s for liability, but after two calls for our newborn we stopped calling and just started making our own judgement calls. But for actual inpatient settings? Absolutely no way. There’s no replacement for actual healthcare providers.

They should use AI to help the folks in medical billing.

An AI chatbot that will continually call the insurance company until your procedure gets reimbursed.

The article is talking about video call consultations with nurses. Read the article or argue the point.

Nvidia has never seen a nurse and has no idea what they do.

And the private equities that own hospitals will purchase this anyway.

Can’t wait for the wave of lawsuits after the AI hallucinates lethal advice and then insists it’s right.

Reminds me of an AI that was programmed to play Tetris and survive for as long as possible. So the machine simply paused the game. Except in this case, it might decide the easiest way to end your suffering is to kill you, so slightly different stakes.

As soon as I work out that my nurse is not a real person, I’m ending the communication. I am not paying a GPU for healthcare.

Too bad it’s going to be up to your insurance, lol.

Fuck this shitty country and the greedy useful idiots that inhabit it.

I will send forth my own AI avatar to battle the nurse

I wonder if insurance is going to be okay with an AI (famous for never making a mistake /s) being involved in healthcare? If a human nurse makes a mistake, the insurance company can sue them and their malpractice insurer; if the AI makes a mistake, who can they blame and go after?

If insurance companies refuse to pay for AI nurses, then hospitals can’t use them?

Ultimately the hospital is responsible for any mistakes their AI makes, so I suppose the insurance company would sue the hospital.

No, you should start saying nonsense and see what that gets you. “My chicken just coagulated”

Garbage… We have had services with real nurses doing telemedicine, and it tends to suck.

Essentially, the lack of actual information from a video chat (as opposed to an in-person visit), coupled with the “better cover the company’s ass and not get sued” mindset, means every call devolves into “better go to the ER to be safe.”

Telemedicine is fantastic and an amazing advancement in medical treatment. It’s just that people keep trying to use it for things it’s not good at and probably never will be good at.

For reference, here’s what telemedicine is good at:

- Refilling prescriptions. “Has anything changed?” “Nope”: You get a refill.

- Getting new prescriptions for conditions that don’t really need a new diagnosis (e.g. someone who occasionally has flare-ups of psoriasis or occasional symptoms of other things).

- Diagnosing blatantly obvious medical problems. “Doctor, it hurts when I do this!” “Yeah, don’t do that.”

- Answering simple questions like, “can I take ibuprofen if I just took a cold medicine that contains acetaminophen?”

- Therapy (duh). Do you really need to sit directly across from the therapist for them to talk to you? For some problems, sure. Most? Probably not.

It’s never going to replace a nurse or doctor completely (someone has to listen to you breathe deeply and bonk your knee). However, with advancements in medical testing, telemedicine may be able to diagnose and treat more conditions in the future.

Using an Nvidia Nurse™ to do something like answering questions about medications seems fine. Such things have direct, factual answers and often simple instructions. An AI nurse could even check the patient’s entire medical history (which could be lengthy) in milliseconds to determine whether a particular medication or course of action might not be best for a particular patient.

There’s lots of room for improvement and efficiency gains in medicine. AI could be the prescription we need.

Yes, I was a bit too extreme with my answer above. However, you’ll be hard-pressed to find people who don’t already know the answer who could formulate a question as good as:

“can I take ibuprofen if I just took a cold medicine that contains acetaminophen?”

Refilling meds, absolutely… as long as the AI has access to, and can accurately interpret, your medical history.

This subject is super nuanced, but the gist of the matter is that, at the moment, AI is super hyped, and it’s in the best interest of the people pumping this hype to keep the bubble growing. As such, Nvidia selling us on the opportunities in AI is like wolves telling us how delicious and morally sound it is to eat sheep three times a day.

Oh, and I don’t know what kind of “therapy” you were referring to… but psychotherapy simply cannot be done by AI. You might as well tell people to get a dog or “when you feel down, smile.”

I want to replace Nvidia executives with AI for $9/hr. Wait, that’s overkill for those morons.

Given that these things still hallucinate quite frequently, I don’t understand how this isn’t a massive risk for Nvidia. I also don’t find it hard to imagine doctors and patients refusing to go to hospitals or clinics that have these implemented.

Oh, they don’t want to automate the risk, ofc. Just the profitable bits of nursing, and then have one nurse left who does all the risk-based stuff.

Time to learn how to root your AI nurse to get infinite morphine.

nice, automated medical racism

If they can take away the drudge work that hospitals force nurses to do and let human nurses do more in-person work (AI cannot deliver a baby, for example), that’s good, right?

Assuming they only put the AI on tasks where it is as good as a human or better (because they will get sued if it makes a mistake), this is just the same health care for cheaper to me. That’s good. We need cheaper healthcare.

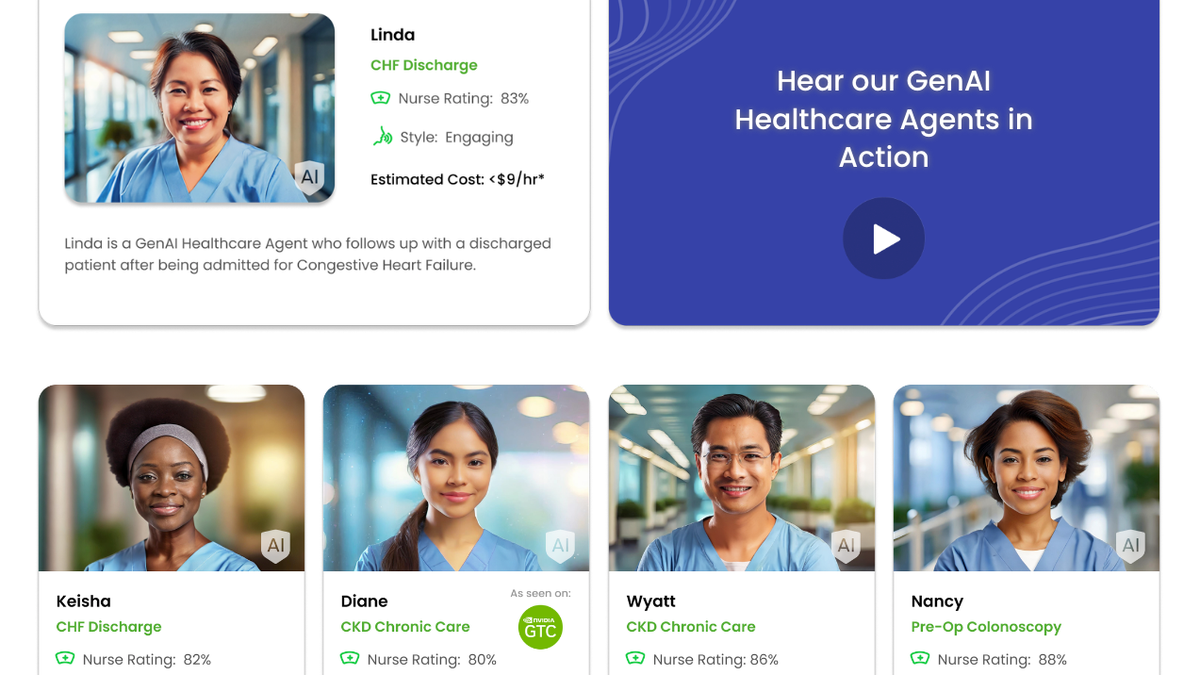

Hippocratic promotes how it can undercut real human nurses, who can cost $90 an hour, with its cheap AI agents that offer medical advice to patients over video calls in real-time.

Kind of.

Absolutely stupid. Some business major is going to promote this and get their hospital shut down for malpractice.

Based on recent experiences in the medical system, AI replacements will probably be an improvement.

My healthcare all rolled over like it does every single year… except for my prescription plan. I had to register an account on their terrible website for both my wife and me before they would allow us to use our plan.

I wish we had a way of leveraging these technologies minus capitalism. AI could solve a lot of problems and offer interesting information to real people, but the greedy removed at the top are just going to use it as a way to finish off the middle class.

I long for the world of Star Trek where our needs are met and we can focus on our lives and interests, but I am too cynical to think that will ever be allowed by the people who run things and their addiction to profit.

Hippocratic AI. That’s hilarious

I foresee no problems with this plan. Surely this won’t result in a string of completely avoidable deaths.

Of course we should! We replaced our doctors with hourly rentable books back when that tech became popular. /s

If nothing else, it would be fucking hilarious to observe the interaction between a state-of-the-art LLM with ML baked into it and an 80-year-old grandpa who just shit his pants and needs help.